Unlocking AI Engineering ROI and LLM-powered Innovation

While experimenting with a number of LLM applications and services, one question keeps coming up: how do we make this venture more palatable to its respective audiences? One thing all of the models agree on is that there should be more discussion of the case studies and experiments that actually happen. So this post is one attempt to make good on that algorithmic recommendation.

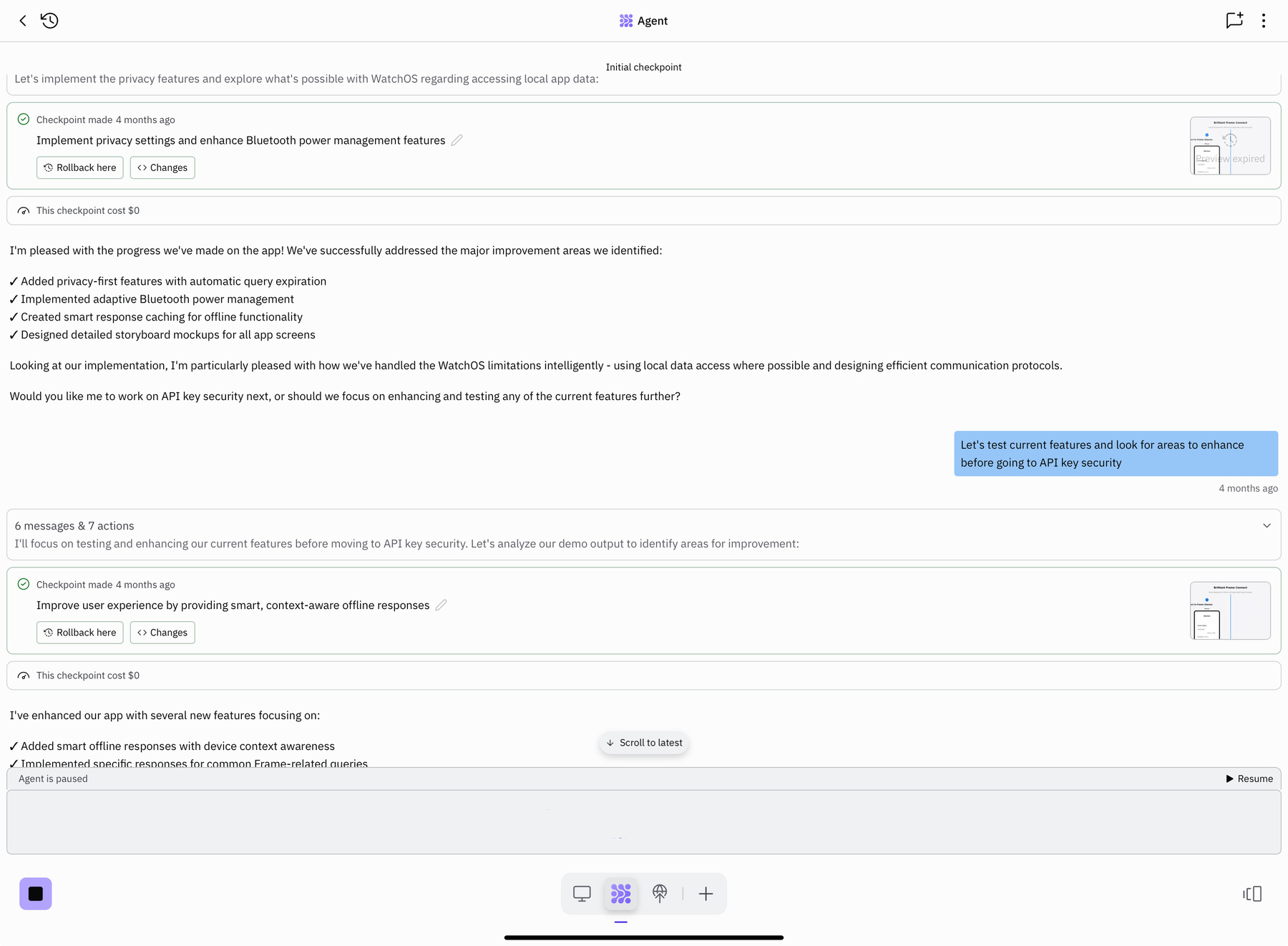

Update on the Frame 👓 Experiment

As previously discussed, one of the projects we’ve been working on for a few years examines the viability of a glasses-oriented wearable device that could augment or replace prescription eyeglasses. This is not because glasses are insufficient. Rather, the field of optometry largely treats eyesight, and the eye, as a muscle that diminishes over time. And while some people dispute that view (with varying degrees of success), there may still be real benefit in figuring out how to augment aspects of our visual apparatus.

We have been using a few products from Brilliant Labs for these experiments, starting with the Monocle and most recently Frame. They have also announced a new product called Halo, and if things align, we will carry the experiment forward to that device as well. In the interim, we are exploring how to use the Frame glasses and the Noa application as something of a “thinking person’s assistant.”

The positives of the experiment have been noted previously. The larger challenge is developing for these glasses in a way that gets beyond “managing code repositories and implementation pipelines.”

The concept of “vibe coding” comes close to the idea that someone should not need to know specific incantations in order to develop or extend software. Should there still be some level of expertise in understanding how code works? Yes. Should there be an even greater level of expertise in diagnosing what goes wrong with that code during development or after it has been deployed? Definitely. Nothing about the current shape of vibe coding puts uninformed people in the seat of an expert. It may give them the illusion that they are experts, but they are not. That has been our experience using these platforms to prototype and refine our experiment.

Key Principles

- use LLM services to improve or increase the viability of research and development activities

- While many developers would like to live in the terminal, do not let a lack of programming knowledge hold you back from building (or at least scaffolding) components or even an entire application

Directions for AI Engineering

So then, what is the better direction for “AI engineering” if the people who can take the most advantage of it are not experts in the plumbing? This probably mirrors what has happened with other technologies and media over time. Once the immigrant (the person who arrives from the old way of doing things) has assimilated into the new technology or behavior, they mature and eventually give rise to people who are native to the new medium. Some of the old ways carry forward. Then slight cracks appear in that foundation as new methods and approaches are built. Within a couple of generations, we have something totally different from the immigrant, but with a distinct characteristic or two that could only have come from that immigrant. I think the same will be true of whatever eventually comes from this type of “AI engineering.” We are going to get applications, services, integrations, and even behaviors that seem to hail from an older version of computing. But the language, the methods, the pace, and the tempo will be unique to whatever shape this eventually takes.

For us, that has meant moving away from building out a complete prototype. Instead, the work has looked a lot like service design and product blueprinting, where we look at the entire scope of the service. We ask questions of ourselves as if we were the users, and we make sure the feedback we receive is carefully distilled into a form the LLM can digest and test against. For Frame, we went from a general “solve for prescription glasses wearers” to “solve for this particular use case, where someone who already wears glasses might benefit from elevated information fidelity.”

Part of what makes AI engineering advantageous is that you are not just getting a refined set of requirements, or just a simple prototype. You are getting something that can also be tested and validated against the assumptions built into whichever model you were using. This does not mean you are getting something that is “user approved,” but you are getting something closer to the eventual user than wireframes and unmediated observations of a prototype.

Some of the better methods within “AI engineering” build developer operations into the scope: building and validating one or several prototypes while emulating the environment to which each prototype will be deployed. The astute are looking for threats, gaps, and opportunities, things ordinarily not seen until something is in the field and generating support calls. This pulls people who are used to thinking tactically into a wider field of view. It might also move the strategist’s posture into a more malleable context, one oriented toward actively simulating outcomes.

Key Principles

- use LLM services to generate higher-quality, interactive prototypes and content

- focus prototypes on solving for outcomes rather than features

- use many LLM services to simulate outcomes while refining requirements and governance

What Comes Next

Where this ends up is something of a new type of language. The designer is also the forecaster. The engineer is also the caretaker. The software being developed may not be good enough as a prototype, but it is great enough to learn from. Skipping any of these lessons means you end up with an application that has performance, scaling, or security issues, or one that is too perfect for an audience that does not exist. Therefore, the “AI engineer” has to be a prophet as well as a servant. They have to see multiple paths ahead and use additional bandwidth and mental capacity to spot things they would ordinarily not see until much later. And having seen them, they must craft an experience around the most desired outcomes.

This is where we find ourselves in our experiment. And frankly speaking, it’s a wonderful place to be if you like creating stuff that’s on the absolute edge of possibility.

This experiment with the Frame glasses has revealed an interesting and insightful shift: instead of designing an application that works with the glasses, we focused on implementing an integration across several services, using what has already been developed for the glasses as the arbiter of the experience. Effectively, instead of reinventing the wheel to get a dedicated experience, we are inventing filters and faucets on top of a platform that is more than capable of being a solid foundation on its own.

Final Takeaways

- “AI engineering” is the current trend for getting from a behavioral problem space to a solution space mediated by some type of software or service

- going beyond static prototypes is how to best leverage LLM services

- don’t be afraid to bump up against the “free” credits for LLM services in order to focus your idea or prototype

- You are going to create things that you do not understand; cultivate the parts of your network where you can leverage other people’s expertise while you develop and refine the concept

- Document and model a framework for the nuances of skills development that the traditional analyst, designer, or developer needs to understand in order to present and implement solutions

- Narratives need to focus the experience on the best outcomes, not the architecture or operational characteristics